Robots.txt disallow pdf files

19/04/2018 · A Robots.txt file is a special text file that is always located in your Web server’s root directory. This file contains restrictions for Web Spiders, telling them where they have permission to search. It should be noted that Web Robots are not required to respect Robots.txt files, but most well-written Web Spiders follow the rules you define.

It seems to imply that you can use Noindex: directives in addition to the standard Disallow: directives in robots.txt. For example, the lines Disallow: /page-one.html and Noindex: /page-two.html would seem to prevent search engines from crawling page one and from indexing page two. Note, however, that Noindex: was never an officially documented robots.txt directive, and Google stopped honoring it in September 2019.

A Robots.txt file is a text file associated with your website that is used to tell the search engines which of your website’s pages you would and would not like them to visit.

28/11/2010 · I’ve modified my robots.txt file to tell search engines to ignore the login screen, downloadable files, confirmation screens, etc. These are things that I don’t want searchable because there’s really no need for them to be on a search engine.

Pages that you disallow in your robots.txt file won’t be crawled, and spiders … You can find more information on robots.txt files on Robotstxt.org. Almost all the major sites use a robots.txt file. Just enter a site’s root URL and add /robots.txt to the end to find out whether the site uses one. The file is displayed in plain text, so anyone can read it. Remember that the robots.txt …

3: Robots.txt Maker; Allow and Disallow folders and files and more. Built-In FTP Access. Now you can easily notify robots which of your files and directories to include and exclude from indexing.

For example, if you want to block crawling of all PDF files on your website, add the following rule to robots.txt: Disallow: /*.pdf$ The asterisk before the file extension matches any sequence of characters (any path and file name), and the dollar sign anchors the pattern to the end of the URL, so the rule applies only to URLs ending in .pdf.
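The wildcard rule above can be sketched in Python as follows. This is a minimal illustration of how a crawler that supports * and $ might evaluate such a pattern. The function names pattern_to_regex and is_blocked are hypothetical, and real crawlers may normalize URLs differently.

```python
import re


def pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regular expression.

    '*' matches any run of characters, and a trailing '$' anchors the
    pattern to the end of the URL. Everything else is matched literally,
    starting at the beginning of the path.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape the literal pieces and join them with '.*' for each wildcard.
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_blocked(path, pattern):
    """Return True if the URL path is matched by the Disallow pattern."""
    return pattern_to_regex(pattern).match(path) is not None


print(is_blocked("/docs/report.pdf", "/*.pdf$"))       # True
print(is_blocked("/docs/report.pdf?dl=1", "/*.pdf$"))  # False
print(is_blocked("/ebooks/guide.pdf", "/ebooks/*.pdf"))  # True
```

The second call shows why the dollar sign matters: with the anchor, a PDF URL that carries a query string no longer matches, so some sites prefer the unanchored form /*.pdf.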

29/01/2015 · The Bing developer forums on MSDN were closed and support moved to this forum, I think around 6 months ago. But then this issue has nothing to do with Bing development.

Disallow: /ebooks/*.pdf → In connection with the first line, this line means that no web crawler should crawl any PDF files in the ebooks folder of this website. As a result, search engines generally won’t show the content of these PDFs in search results.

Have you configured your robots.txt file correctly? This article will give you a proper understanding of what robots.txt is and how to configure it appropriately, limiting …

I moved the /files/ disallow to the bottom and ran a test on one PDF file in the files directory and it returned Success. How can I fix this issue? We do not want anything in this directory being indexed.

Robots.txt disallow. It’s very important to know that the “Disallow” command in your WordPress robots.txt file doesn’t function exactly the same as the noindex meta tag in a page’s header. Your robots.txt blocks crawling, but not necessarily indexing, with the exception of website files such as images and documents. Search engines still can …

The Robots.txt file is one of the easiest files to understand. The four main properties used in Robots.txt are User-agent, Disallow, Allow and Sitemap (though other properties exist such as Deny, Crawl-delay and Request-rate).

From what I can see, Google shows 182,000 robots.txt files in their index – [inurl:robots.txt filetype:txt] is the query I was studying. By posting that specific query, I am making an exception to our usual policy of “no specific searches”, but I feel it is generic enough – there are no keywords involved – to allow an exception in this case.

In another case, an SEO company edited the robots.txt file to disallow indexing of all parts of a website after the site’s owner stopped paying the SEO company. I also remember reviewing a company’s website and noticing that several directories that were part of their former site were disallowed in their robots.txt file.

Now create a ‘robots.txt’ file in your root directory, then copy the text above and paste it into the file. Robots.txt Generator produces a file that is essentially the opposite of a sitemap: a sitemap indicates the pages to be included, whereas robots.txt indicates what to exclude. Getting the robots.txt syntax right is therefore of great significance for any website.

A robots.txt file provides search engines with the necessary information to properly crawl and index a website. Search engines such as Google, Bing, Yahoo, etc., all have bots that crawl websites on a periodic basis in order to collect existing and/or new information such as …

The robots.txt file is one of the main ways of telling a search engine where it can and can’t go on your website. All major search engines support the basic functionality it offers, but some of them respond to some extra rules which can be useful too.

The robots.txt file is a simple text file placed on your web server which tells webcrawlers like Googlebot if they should access a file or not.

Basic robots.txt examples

Here are some common robots.txt setups (they will be explained in detail below).

The Definitive Guide To Creating A Robots.txt File For Your Website

What is a Robots.txt file?

Back in the early days of the internet, programmers and engineers created ‘robots’ or ‘spiders’ to crawl and index pages on the web.

As we wrap up our robots.txt guide, we want to remind you one more time that using a Disallow command in your robots.txt file is not the same as using a noindex tag. Robots.txt blocks crawling, but not necessarily indexing. You can use it to add specific rules to shape how search engines and other bots interact with your site, but it will not explicitly control whether your content is indexed

robots.txt File. You can use robots.txt to disallow search engine crawling of specific directories or pages on your web site. Patterns in the robots.txt file are matched against the …

Using your robots.txt file to disallow specific files or folders is the simplest, most basic way to use it. However, you can get more precise and efficient in your code by making use of the wildcard in the disallow …

    User-agent: *
    Disallow: /search
    Allow: /search/about
    Allow: /search/static
    Allow: /search/howsearchworks
    Disallow: /sdch
    Disallow: /groups
    Disallow: /index.html?
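When Allow and Disallow rules overlap, as in the excerpt above, crawlers that follow the Robots Exclusion Protocol standard (RFC 9309), Googlebot among them, apply the most specific (longest) matching rule, and Allow wins a tie. The following Python sketch illustrates that precedence under simplifying assumptions: it handles plain prefixes only (no wildcards), and the names RULES and is_allowed are hypothetical.

```python
# Rules taken from the excerpt above, as (directive, path prefix) pairs.
RULES = [
    ("disallow", "/search"),
    ("allow", "/search/about"),
    ("allow", "/search/static"),
    ("allow", "/search/howsearchworks"),
    ("disallow", "/sdch"),
    ("disallow", "/groups"),
    ("disallow", "/index.html?"),
]


def is_allowed(path, rules):
    """Apply longest-match precedence to a URL path.

    The longest matching prefix decides the outcome. If an Allow and a
    Disallow rule of equal length both match, Allow wins. A path that
    matches no rule may be crawled.
    """
    best_len = -1
    allowed = True
    for directive, prefix in rules:
        if prefix and path.startswith(prefix):
            longer = len(prefix) > best_len
            tie_for_allow = len(prefix) == best_len and directive == "allow"
            if longer or tie_for_allow:
                best_len = len(prefix)
                allowed = directive == "allow"
    return allowed


print(is_allowed("/search?q=robots", RULES))  # False
print(is_allowed("/search/about", RULES))     # True
print(is_allowed("/maps", RULES))             # True
```

Note that not every parser works this way. Some, including Python's built-in urllib.robotparser, apply the first matching rule in file order instead, so rule order can still matter in practice.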

You might be surprised to hear that one small text file, known as robots.txt, could be the downfall of your website. If you get the file wrong you could end up telling search engine robots not to crawl your site, meaning your web pages won’t appear in the search results.

In this case, you should not disallow the page in robots.txt, because the page must be crawled in order for the tag to be seen and obeyed. Set the X-Robots-Tag header to noindex for all files in those folders.

Even though the robots.txt file was invented to tell search engines what pages not to crawl, the robots.txt file can also be used to point search engines to the XML sitemap. This is supported by Google, Bing, Yahoo and Ask.
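As a rough illustration of the Sitemap line, the sketch below parses a small robots.txt with Python's standard urllib.robotparser module and reads the sitemap URL back. The domain www.example.com and the /private/ folder are placeholders, and site_maps() requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# A small, made-up robots.txt that blocks one folder and advertises a sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Sitemap URLs declared in the file (None if there are none).
print(parser.site_maps())  # ['https://www.example.com/sitemap.xml']

# The Disallow rule still applies independently of the Sitemap line.
print(parser.can_fetch("*", "https://www.example.com/private/report.pdf"))  # False
print(parser.can_fetch("*", "https://www.example.com/public/report.pdf"))   # True
```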

In short, a Robots.txt file controls how search engines access your website. This text file contains “directives” which dictate to search engines which pages to “Allow” and “Disallow” …

I have several PDF files uploaded on WordPress (via Media Uploader) that should not be indexed by Google. The only solution I could find was to add the filenames to my robots.txt, telling robots not to crawl these files.

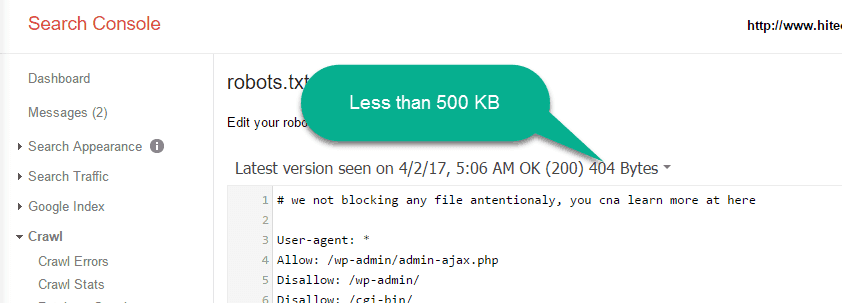

One of these tools is a robots.txt checker, which you can use by logging into your console and navigating to the robots.txt Tester tab: Inside, you’ll find an editor field where you can add your WordPress robots.txt file code, and click on the Submit button right below.

OK, so I remove the PDF files using robots.txt and/or GWMT (Google Webmaster Tools). (Current tests show this is not yet working and it is a couple of weeks along, but hey, I can wait.)

Some of the Internet’s most important pages from many of the most linked-to domains are blocked by a robots.txt file. Does your website misuse the robots.txt file, too? Find out how search engines really treat robots.txt blocked files, entertain yourself with a few seriously flawed live examples, and learn how to avoid the same mistakes yourself.

Unfortunately, the original robots.txt standard offers no way to disallow spidering of a certain file type, so you must list each file if you want to use robots.txt without a special directory for banned files. Google, however, supports an extension to the robots.txt standard (wildcard patterns) that allows you to keep it from spidering PDF files.

The default PrestaShop robots.txt contains the directive Disallow: */modules/. However, this causes the top banner and image slider images to be blocked, because these images reside in subfolders of the theme configurator and image slider modules.

13/09/2018 · The robots.txt file must be in the top-level directory of the host, accessible through the appropriate protocol and port number. Generally accepted protocols for robots.txt (and crawling of websites) are “http” and “https”. On http and https, the robots.txt file is fetched using an HTTP non-conditional GET request.
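Because the file location depends only on the protocol, host and port, the robots.txt URL for any page can be derived mechanically. A minimal Python sketch, where the function name robots_txt_url and the example URL are hypothetical:

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url):
    """Return the robots.txt URL that governs the given page URL.

    The scheme, host and port are kept as they are. The path is replaced
    by /robots.txt, and any query string or fragment is dropped.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.example.com:8443/files/report.pdf?dl=1#top"))
# https://www.example.com:8443/robots.txt
```

A page served on a different port or subdomain is governed by a different robots.txt file, which is why the host and port are preserved here.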

robots.txt is a text file that’s stored in the root directory of a domain. By blocking some or all search robots from selected parts of a site, these files allow website operators to control search engines’ access to websites.

    #
    # robots.txt
    #
    User-agent: *
    Crawl-delay: 10
    # Directories
    Disallow: /includes/
    Disallow: /misc/
    Disallow: /modules/
    Disallow: /profiles/
    Disallow: /scripts

The robots.txt file provides valuable data to the search engines scanning the Web. Before examining the pages of your site, search robots check this file. Explore how to test robots.txt with Google Webmasters. Check the indexability of a particular URL on your website.

robots.txt does not prevent resources from being indexed, only from being crawled, so the best solution is to use the x-robots-tag header, yet allow the search engines to crawl and find that header by leaving your robots.txt alone.

About /robots.txt

In a nutshell

Web site owners use the /robots.txt file to give instructions about their site to web robots; this is called The Robots Exclusion Protocol.

A robots.txt file is a text file that resides on your server. It contains rules for indexing your website and is a tool to directly communicate with search engines.

To maintain compatibility with robots that may deviate from the standard when processing robots.txt, the Crawl-delay directive needs to be added to the group that starts with the User-agent record, right after the Disallow and Allow directives.
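The placement described above can be sketched as follows, with Crawl-delay as the last line of the group, after the Disallow and Allow lines. The crawler name ExampleBot and the paths are made up for illustration. The file is read back with Python's standard urllib.robotparser, whose crawl_delay() method returns the delay for a given user agent.

```python
from urllib.robotparser import RobotFileParser

# Crawl-delay sits inside the User-agent group, after Disallow and Allow.
ROBOTS_TXT = """\
User-agent: ExampleBot
Disallow: /search
Allow: /search/about
Crawl-delay: 10
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Delay requested for the hypothetical ExampleBot crawler.
print(parser.crawl_delay("ExampleBot"))  # 10
```

Crawl-delay is a non-standard extension, and some crawlers do not honor it at all.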

Only use robots.txt (and robots meta tags) to exclude files, pages and directories that are intended to be available to people but not to robots, such as duplicate pages, test pages and demos. Rule of thumb: If you want to restrict robots from entire websites and directories, use the robots.txt file.

A robots.txt file is a text file you can place on your website to instruct robots on where to crawl or, more importantly, where not to crawl. This is important because, unless you want all of the pages and files on your site to show up in search engine results, you will want to learn how to create a robots.txt file.

6/07/2018 · On my website, people can convert documents to PDF using the Print-PDF module. That module saves the files in a cache folder. How do I prevent search engines from indexing this folder and the PDF files in it? I have used the Disallow option to exclude the folder and extension in the robots.txt file, but it’s not working for me. I don’t want to put a …

To disallow indexing, you could use the HTTP header X-Robots-Tag with the noindex parameter. In that case, you should not block crawling of the file in robots.txt, otherwise bots would never be able to see your headers (and so they would never know that you don’t want this file to get indexed).
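How the header is set depends on the web server, so the following is only a sketch of the idea using Python's built-in http.server. The names x_robots_tag_for, NoIndexPDFHandler and serve are hypothetical, and a production site would normally configure this in its own web server rather than in a script like this.

```python
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer
from urllib.parse import urlsplit


def x_robots_tag_for(request_path):
    """Return the X-Robots-Tag value for a request path, or None.

    PDF files get 'noindex'. Everything else is served without the header.
    """
    path = urlsplit(request_path).path
    return "noindex" if path.lower().endswith(".pdf") else None


class NoIndexPDFHandler(SimpleHTTPRequestHandler):
    """Static file handler that adds 'X-Robots-Tag: noindex' to PDF responses."""

    def end_headers(self):
        tag = x_robots_tag_for(self.path)
        if tag is not None:
            self.send_header("X-Robots-Tag", tag)
        super().end_headers()


def serve(port=8000):
    """Serve the current directory on localhost until interrupted."""
    ThreadingHTTPServer(("127.0.0.1", port), NoIndexPDFHandler).serve_forever()
```

Calling serve() and requesting a PDF should show the extra header in the response. The PDFs must stay crawlable in robots.txt for a search engine to see it.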

Old robots.txt files—Check Them! Depending on when you originally installed your Joomla site, there may be entries in the robots.txt file that should not be there. At the time the site was installed, the file was probably perfectly fine, but search engine optimization (SEO) is constantly evolving, and you may be stuck with the old configuration.

The structure of a robots.txt file is simple: it is a list, as long as you need, of user agents and disallowed files and directories. “User-agent:” names the search engine crawlers or bots that a group of rules applies to, and “Disallow:” lists the files and directories to be excluded from crawling.

Note that directives in the robots.txt file are instructions only: malicious crawlers will just ignore your robots.txt file and crawl any part of your site that is not protected, so Disallow should not be used in place of robust security measures.

The robots.txt file is small but crucial to how search engines crawl a website. A faulty robots.txt file can badly affect how your web pages appear in search results. Therefore, it’s important to understand the purpose of the robots.txt file and to learn how to check that you are using it correctly.

This file must be accessible via HTTP on the local URL “/robots.txt”. The contents of this file are specified below. This approach was chosen because it can be easily implemented on any existing WWW server, and a robot can find the access policy with only a single document retrieval.

Webmasters use robots.txt files to help search engines index the content of their websites. With the help of this file, webmasters can tell search engine spiders not to crawl pages that they do not consider important enough to be crawled, such as PDF files, printable versions of pages, and many more. In this way they get a better opportunity to have important pages featured in search engine …

Disallow. The second part of robots.txt is the disallow line. This directive tells spiders which pages they aren’t allowed to crawl. You can have multiple disallow …

The Robots Exclusion Standard was developed in 1994 so that website owners could advise search engines on how to crawl their websites. It works in a similar way to the robots meta tag, which I discussed at great length recently.

The robots.txt file can simply be created using a text editor. Every file consists of two blocks. First, one specifies the user agent to which the instruction should apply, then follows a “Disallow” command after which the URLs to be excluded from the crawling are listed.
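The two-part structure described above (a user agent line followed by its Disallow lines) is simple enough to generate from a script. A minimal Python sketch, where the function name build_robots_txt and the example paths are hypothetical:

```python
def build_robots_txt(groups):
    """Build robots.txt text from a mapping of user agent to disallowed paths.

    Each group is written as one 'User-agent:' line followed by one
    'Disallow:' line per path. An empty path list produces a bare
    'Disallow:' line, which blocks nothing for that user agent.
    """
    lines = []
    for agent, paths in groups.items():
        lines.append(f"User-agent: {agent}")
        if paths:
            lines.extend(f"Disallow: {path}" for path in paths)
        else:
            lines.append("Disallow:")
        lines.append("")  # a blank line separates groups
    return "\n".join(lines)


print(build_robots_txt({"*": ["/files/", "/wp-content/uploads/report.pdf"]}))
```

The output can be saved as robots.txt in the site's root directory. Listing individual PDF files this way also reveals their URLs to anyone who reads the file, so it is not a way to keep them private.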


I have several PDF files uploaded on WordPress (via Media Uploader), that should not be indexed by Google. The only solution I could find was to add the filenames on my robots.txt, denying robots to crawl these files.

Even though the robots.txt file was invented to tell search engines what pages not to crawl, the robots.txt file can also be used to point search engines to the XML sitemap. This is supported by Google, Bing, Yahoo and Ask.

robots.txt File. You can use robots.txt to disallow search engine crawling of specific directories or pages on your web site. Patterns in the robots.txt file are …

Only use robots.txt (and robots metatags) to exclude files, pages and directories that are intended to be available to people but not to robots, such as duplicate pages, test pages and demos. Rule of thumb: If you want to restrict robots from entire websites and directories, use the robots.txt file.

robots.txt File html-5.com

Robots.txt how to disallow certain parts of the site

robots.txt File. You can use robots.txt to disallow search engine crawling of specific directories or pages on your web site. Patterns in the robots.txt file are …

To disallow indexing, you could use the HTTP header X-Robots-Tag with the noindex parameter. In that case, you should not block crawling of the file in robots.txt, otherwise bots would never be able to see your headers (and so they would never know that you don’t want this file to get indexed).

3: Robots.txt Maker; Allow and Disallow folders and files and more. Built-In FTP Access. Now you can easily notify robots which of your files and directories to include and exclude from indexing.

The Robots.txt file is one of the easiest files to understand. The four main properties used in Robots.txt are User-agent, Disallow, Allow and Sitemap (though other properties exist such as Deny, Crawl-delay and Request-rate).

The robots.txt file can simply be created using a text editor. Every file consists of two blocks. First, one specifies the user agent to which the instruction should apply, then follows a “Disallow” command after which the URLs to be excluded from the crawling are listed.

Robots.txt disallow It’s very important to know that the “Disallow” command in your WordPress robots.txt file doesn’t function exactly same as the noindex meta tag on a page’s header. Your robots.txt blocks crawling, but not necessarily indexing with the exception of website files such as images and documents. Search engines still can

The Robots Exclusion Standard was developed in 1994 so that website owners can advise search engines how to crawl your website. It works in a similar way as the robots meta tag which I discussed in great length recently.

My robots.txt file is in the SERPS Google SEO News and

php Add pdf files to robots.txt on WordPress – Stack

From what I can see, Google shows 182,000 robot.txt files in their index – [inurl:robots.txt filetype:txt] is the query I was studying. By posting that specific query, I am making an exception to our usual policy of “no specific searches”, but I feel it is generic enough – there are no keywords involved – to allow an exception in this case.

A Robots.txt file is a text file associated with your website that is used to tell the search engines which of your website’s pages you would and would not like them to visit.

The structure of a robots.txt is simple. it is an endless list of user agents and disallowed files and directories. “ User-agent:” are search engines’ crawlers or bots and “ disallow:” lists the files /directories to be excluded from indexing.

For example, if you want to close all PDF files on your website, you have to write the following instruction in Robots.txt: Disallow: /*.pdf$ An asterisk before the file extension means any sequence of characters (any name), and the dollar sign at the end indicates that the index prohibition applies only to files ending with .pdf .

I moved the /files/ disallow to the bottom and ran a test on one PDF file in the files directory and it returned Success. How can I fix this issue? We do not want anything in this directory being indexed.

User-agent: * Disallow: /search Allow: /search/about Allow: /search/static Allow: /search/howsearchworks Disallow: /sdch Disallow: /groups Disallow: /index.html?

# # robots.txt # User-agent: * Crawl-delay: 10 # Directories Disallow: /includes/ Disallow: /misc/ Disallow: /modules/ Disallow: /profiles/ Disallow: /scripts

robots.txt File. You can use robots.txt to disallow search engine crawling of specific directories or pages on your web site. Patterns in the robots.txt file are …

The robots.txt file is one of the main ways of telling a search engine where it can and can’t go on your website. All major search engines support the basic functionality it offers, but some of them respond to some extra rules which can be useful too.

Using your robots.txt file to disallow specific files or folders is the simplest, is the most basic way to use it. However, you can get more precise and efficient in your code by making use of the wildcard in the disallow …

19/04/2018 · A Robots.txt file is a special text file that is always located in your Web server’s root directory. This file contains restrictions for Web Spiders, telling them where they have permission to search. It should be noted that Web Robots are not required to respect Robots.txt files, but most well-written Web Spiders follow the rules you define.

The robots.txt file can simply be created using a text editor. Every file consists of two blocks. First, one specifies the user agent to which the instruction should apply, then follows a “Disallow” command after which the URLs to be excluded from the crawling are listed.

The Robots.txt file is one of the easiest files to understand. The four main properties used in Robots.txt are User-agent, Disallow, Allow and Sitemap (though other properties exist such as Deny, Crawl-delay and Request-rate).

Old robots.txt files—Check Them! Depending on when you originally installed your Joomla site, there may be entries in the robots.txt file that should not be there. At the time the site was installed, the file was probably perfectly fine, but search engine optimization (SEO) is constantly evolving, and you may be stuck with the old configuration.

What Is Robots.txt? Siamak Kalhor Consulting

Robots.txt file Blogging Wizard

# # robots.txt # User-agent: * Crawl-delay: 10 # Directories Disallow: /includes/ Disallow: /misc/ Disallow: /modules/ Disallow: /profiles/ Disallow: /scripts

robots.txt File. You can use robots.txt to disallow search engine crawling of specific directories or pages on your web site. Patterns in the robots.txt file are …

13/09/2018 · The robots.txt file must be in the top-level directory of the host, accessible though the appropriate protocol and port number. Generally accepted protocols for robots.txt (and crawling of websites) are “http” and “https”. On http and https, the robots.txt file is fetched using a HTTP non-conditional GET request.

28/11/2010 · I’ve modified my robots.txt file to tell search engines to ignore the login screen, download able files, confirmation screens ext. Things that I don’t want searchable because there’s no need for it to on a search engine really.

3: Robots.txt Maker; Allow and Disallow folders and files and more. Built-In FTP Access. Now you can easily notify robots which of your files and directories to include and exclude from indexing.

robots.txt is a text file that’s stored in the root directory of a domain. By blocking some or all search robots from selected parts of a site, these files allow website operators to control search engines’ access to websites.

Old robots.txt files—Check Them! Depending on when you originally installed your Joomla site, there may be entries in the robots.txt file that should not be there. At the time the site was installed, the file was probably perfectly fine, but search engine optimization (SEO) is constantly evolving, and you may be stuck with the old configuration.

robots.txt does not prevent resources from being indexed, only from being crawled, so the best solution is to use the x-robots-tag header, yet allow the search engines to crawl and find that header by leaving your robots.txt alone.

Even though the robots.txt file was invented to tell search engines what pages not to crawl, the robots.txt file can also be used to point search engines to the XML sitemap. This is supported by Google, Bing, Yahoo and Ask.

Proper SEO and the Robots.txt File Search Engine Watch

How To Correctly Setup & Configure Robots.txt In OpenCart

Bạn đã cấu hình file robots.txt của mình đúng hay chưa? Bài viết này sẽ giúp bạn có một cái nhìn đúng về robots.txt là gì? và cấu hình cho phù hợp, hạn chế …

robots.txt is a text file that’s stored in the root directory of a domain. By blocking some or all search robots from selected parts of a site, these files allow website operators to control search engines’ access to websites.

Now, Create ‘robots.txt’ file at your root directory. Copy above text and paste into the text file. Robots.txt Generator generates a file that is very much opposite of the sitemap which indicates the pages to be included, therefore, robots.txt syntax is of great significance for any website.

This file must be accessible via HTTP on the local URL “/robots.txt”. The contents of this file are specified below . This approach was chosen because it can be easily implemented on any existing WWW server, and a robot can find the access policy with only a single document retrieval.

It seems to imply that you can you use Noindex: directives in addition to the standard Disallow: directives in robots.txt. Disallow: /page-one.html Noindex: /page-two.html Seems like it would prevent search engines from crawling page one, and prevent them from indexing page two.

Disallow. The second part of robots.txt is the disallow line. This directive tells spiders which pages they aren’t allowed to crawl. You can have multiple disallow …

6/07/2018 · On my website people can convert documents to PDF using the Print-PDF module. That module saves the files in a cache folder. How do I prevent search engines from indexing this folder and the PDF files in it? I have used the Disallow option to exclude the folder and extension in robots.txt file, but it’s not working for me. I don’t want to put a

The structure of a robots.txt is simple. it is an endless list of user agents and disallowed files and directories. “ User-agent:” are search engines’ crawlers or bots and “ disallow:” lists the files /directories to be excluded from indexing.

For example, if you want to close all PDF files on your website, you have to write the following instruction in Robots.txt: Disallow: /*.pdf$ An asterisk before the file extension means any sequence of characters (any name), and the dollar sign at the end indicates that the index prohibition applies only to files ending with .pdf .

How To Correctly Setup & Configure Robots.txt In OpenCart

robots.txt file google.com

The robots.txt file can simply be created using a text editor. Every file consists of two blocks. First, one specifies the user agent to which the instruction should apply, then follows a “Disallow” command after which the URLs to be excluded from the crawling are listed.

I have several PDF files uploaded on WordPress (via Media Uploader), that should not be indexed by Google. The only solution I could find was to add the filenames on my robots.txt, denying robots to crawl these files.

The robots.txt file is one of the main ways of telling a search engine where it can and can’t go on your website. All major search engines support the basic functionality it offers, but some of them respond to some extra rules which can be useful too.

Only use robots.txt (and robots metatags) to exclude files, pages and directories that are intended to be available to people but not to robots, such as duplicate pages, test pages and demos. Rule of thumb: If you want to restrict robots from entire websites and directories, use the robots.txt file.

3: Robots.txt Maker; Allow and Disallow folders and files and more. Built-In FTP Access. Now you can easily notify robots which of your files and directories to include and exclude from indexing.

The Robots Exclusion Standard was developed in 1994 so that website owners can advise search engines how to crawl your website. It works in a similar way as the robots meta tag which I discussed in great length recently.

In another case, a SEO company edited the robots.txt file to disallow indexing of all parts of a website after the site’s owner stopped paying the SEO company. I also remember reviewing a company’s website and noticing that several directories that were part of their former site were disallowed in their robots.txt file.

The structure of a robots.txt is simple. it is an endless list of user agents and disallowed files and directories. “ User-agent:” are search engines’ crawlers or bots and “ disallow:” lists the files /directories to be excluded from indexing.

My robots.txt file is in the SERPS Google SEO News and

One of these tools is a robots.txt checker, which you can use by logging into your console and navigating to the robots.txt Tester tab: Inside, you’ll find an editor field where you can add your WordPress robots.txt file code, and click on the Submit button right below.

Importance of Robots.txt file Webplanners Blog

robots.txt Springer for Research & Development

The Ultimate Robots.txt Guide to Improve Crawl Efficiency

Robots.txt file is small but very crucial for the Search engine to crawl any website. Creating wrong Robots.txt file can badly affect appearance of your web pages search result. Therefore, it’s important that we understand the purpose of robot.txt file and should learn how to check you are using it correctly.

robots.txt File HTML 5

Preventing Public Search Engines from Spidering PDF Files

In short, a Robots.txt file controls how search engines access your website. This text file contains “directives” which dictate to search engines which pages are to “Allow” and “Disallow…

Robots.txt Generator Generate robots.txt file instantly

WordPress Robots.txt Guide What It Is and How to Use It

Webmasters use robots.txt files to help search engines index the content of their websites. With the help of this file webmasters can tell the search engine spiders not to crawl the pages that they do not consider important enough to be crawled, such as pdf files, printable version of pages and many more. In this way they get better opportunity to have important pages featured in search engine

The Ultimate Robots.txt Guide to Improve Crawl Efficiency

WordPress Robots.txt File Beginner’s Guide DH

robots.txt File html-5.com

6/07/2018 · On my website people can convert documents to PDF using the Print-PDF module. That module saves the files in a cache folder. How do I prevent search engines from indexing this folder and the PDF files in it? I have used the Disallow option to exclude the folder and extension in robots.txt file, but it’s not working for me. I don’t want to put a

Disallow pdf Sitemaps Meta Data and robots.txt forum

To disallow indexing, you could use the HTTP header X-Robots-Tag with the noindex parameter. In that case, you should not block crawling of the file in robots.txt, otherwise bots would never be able to see your headers (and so they would never know that you don’t want this file to get indexed).

The Ultimate Robots.txt Guide to Improve Crawl Efficiency

Robots.txt file serves to provide valuable data to the search systems scanning the Web. Before examining the pages of your site, the searching robots perform verification of this file. Explore how to test robots.txt with Google Webmasters. Сheck the indexability of a particular URL on your website.

Optimize WordPress Robots.txt Prevent Direct Access

29/01/2015 · The bing developer forums on MSDN were closed and support moved to this forum, I think around 6 months ago. But then this issue has nothing to do with Bing development.

The Robots.txt File Kevin Muldoon

A robots.txt file is a text file that resides on your server. It contains rules for indexing your website and is a tool to directly communicate with search engines. It contains rules for indexing your website and is a tool to directly communicate with search engines.

Robots.txt Specifications Search Google Developers

Disallow. The second part of robots.txt is the disallow line. This directive tells spiders which pages they aren’t allowed to crawl. You can have multiple disallow …

What Is a Robots.txt File? The Best Way to Configure robots.txt

Proper SEO and the Robots.txt File Search Engine Watch

I have several PDF files uploaded on WordPress (via Media Uploader), that should not be indexed by Google. The only solution I could find was to add the filenames on my robots.txt, denying robots to crawl these files.

The fastest robots.txt testing tool Ryte Inc.

Robots.txt File What Is It? How to Use It? // WEBRIS

Robots.txt how to disallow certain parts of the site

Robots.txt File Explained Allow or Disallow All or Part

How to Write a Robots.txt File support.microsoft.com

robots.txt is a text file that’s stored in the root directory of a domain. By blocking some or all search robots from selected parts of a site, these files allow website operators to control search engines’ access to websites.

Now create a ‘robots.txt’ file in your root directory, then copy the text above and paste it into the file. A robots.txt generator produces a file that is in effect the opposite of a sitemap: a sitemap indicates the pages to be included, while robots.txt indicates what to exclude. Its syntax is therefore of great significance for any website.
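What such a generator does can be sketched very simply. The function name `build_robots_txt` and the example paths are hypothetical. This is not the code of any particular generator tool, only a minimal illustration of the file format.

```python
def build_robots_txt(disallow, allow=(), user_agent="*"):
    """Build robots.txt text for one user-agent group from lists of path rules."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Allow: {path}" for path in allow]
    lines += [f"Disallow: {path}" for path in disallow]
    # One directive per line, with a trailing newline at the end of the file.
    return "\n".join(lines) + "\n"
```

The returned string would then be saved as robots.txt in the root directory of the site, for example after calling `build_robots_txt(["/login/", "/downloads/"])`.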

What Is a Robots.txt File KeyCDN Support

Robots.txt disallow It’s very important to know that the “Disallow” command in your WordPress robots.txt file does not work in exactly the same way as the noindex meta tag in a page’s header. Your robots.txt blocks crawling, but not necessarily indexing, with the exception of website files such as images and documents. Search engines can still index a blocked URL, for example when other pages link to it.

How to Create a Robots txt File for Your Blog or Website

Robots.txt Generator McAnerin International Inc

How to stop PDF files from being indexed by search engines

Some of the Internet’s most important pages from many of the most linked-to domains, are blocked by a robots.txt file. Does your website misuse the robots.txt file, too? Find out how search engines really treat robots.txt blocked files, entertain yourself with a few seriously flawed live examples, and learn how to avoid the same mistakes yourself.

robots.txt File. You can use robots.txt to disallow search engine crawling of specific directories or pages on your web site. Patterns in the robots.txt file are matched against the …

Using your robots.txt file to disallow specific files or folders is the simplest, most basic way to use it. However, you can get more precise and efficient by making use of the wildcard in the disallow …

php Google is ignoring my robots.txt – Stack Overflow

One of these tools is a robots.txt checker, which you can use by logging into your console and navigating to the robots.txt Tester tab: Inside, you’ll find an editor field where you can add your WordPress robots.txt file code, and click on the Submit button right below.

robots.txt File HTML 5

Importance of Robots.txt file Webplanners Blog

Disallow: /ebooks/*.pdf → In connection with the first line, this line means that no web crawler should crawl any PDF file in the ebooks folder of this website. Compliant search engines will then not fetch these direct PDF links, although blocking crawling alone does not guarantee that the URLs stay out of search results.

robots.txt file google.com

The Importance of a Robots.txt File Hallam Internet

You might be surprised to hear that one small text file, known as robots.txt, could be the downfall of your website. If you get the file wrong you could end up telling search engine robots not to crawl your site, meaning your web pages won’t appear in the search results.

Robots.txt Generator Generate robots.txt file instantly

How To Use The robots.txt File To Improve Results

Index management with the robots.txt file 1&1 IONOS

Preventing Public Search Engines from Spidering PDF Files

The robots.txt file is one of the main ways of telling a search engine where it can and can’t go on your website. All major search engines support the basic functionality it offers, but some of them respond to some extra rules which can be useful too.

Security Tip for robots.txt file Joomla! Forum

Have you configured your robots.txt file correctly? This article will give you a correct understanding of what robots.txt is and how to configure it appropriately, limiting …

WordPress Robots.txt Guide What It Is and How to Use It

Robots.txt file Blogging Wizard

robots.txt the ultimate guide Yoast

robots.txt does not prevent resources from being indexed, only from being crawled, so the best solution is to use the x-robots-tag header, yet allow the search engines to crawl and find that header by leaving your robots.txt alone.

robots.txt Springer for Research & Development

3: Robots.txt Maker; Allow and Disallow folders and files and more. Built-In FTP Access. Now you can easily notify robots which of your files and directories to include and exclude from indexing.

Serious Robots.txt Misuse & High Impact Solutions Why

This file must be accessible via HTTP on the local URL “/robots.txt”. The contents of this file are specified below. This approach was chosen because it can be easily implemented on any existing WWW server, and a robot can find the access policy with only a single document retrieval.
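Because the file always lives at the fixed local URL “/robots.txt”, a robot can derive its location from any page address on the same host. A minimal sketch using Python's standard `urllib.parse` follows. The helper name `robots_url` and the example.com address are assumptions for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url):
    """Return the robots.txt URL for the host that serves page_url."""
    parts = urlsplit(page_url)
    # Keep scheme and host, replace the path, and drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))
```

For instance, `robots_url("https://example.com/ebooks/guide.pdf?x=1")` yields the single address a crawler needs to retrieve before visiting anything else on that host.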

Note that directives in the robots.txt file are instructions only: malicious crawlers will just ignore your robots.txt file and crawl any part of your site that is not protected, so Disallow should not be used in place of robust security measures.

For example, to block crawling of all PDF files on your website, write the following instruction in robots.txt: Disallow: /*.pdf$ The asterisk before the file extension matches any sequence of characters (any name), and the dollar sign at the end indicates that the rule applies only to URLs ending with .pdf.
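How the asterisk and the dollar sign behave can be illustrated by translating a rule into a regular expression. This is a sketch of the matching idea only, assuming the wildcard semantics described above. The helper names are hypothetical, and real crawlers may differ in details such as percent-encoding.

```python
import re


def rule_to_regex(pattern):
    """Translate a robots.txt path pattern using * and a trailing $ into a regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Each * becomes "any sequence of characters"; everything else is literal.
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def rule_matches(pattern, path):
    """Return True if the URL path (plus query string) is covered by the pattern."""
    # re.match anchors at the start, mirroring prefix matching in robots.txt.
    return rule_to_regex(pattern).match(path) is not None
```

With `/*.pdf$`, a path such as `/files/report.pdf` is covered, while `/files/report.pdf?download=1` is not, because the dollar sign requires the URL to end with .pdf. Without the dollar sign the second path would be covered as well.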

In this case, you should not disallow the page in robots.txt, because the page must be crawled in order for the tag to be seen and obeyed. Set X-Robots-Tag header with noindex for all files in the folders.

The default PrestaShop robots.txt contains the directive Disallow: */modules/. However, this causes the top banner and image slider images to be blocked, because these images reside in subfolders of the theme configurator and image slider modules.
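One common way out of this kind of conflict is a more specific Allow rule. The sketch below assumes the longest-match precedence that Google documents for its crawler: the longest matching pattern wins, and Allow wins a tie. The folder name `slider-example` is hypothetical and not an actual PrestaShop path.

```python
import re


def _regex(pattern):
    """Translate a robots.txt pattern using * and a trailing $ into a regex."""
    anchored = pattern.endswith("$")
    core = pattern[:-1] if anchored else pattern
    body = ".*".join(re.escape(piece) for piece in core.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_crawlable(path, rules):
    """rules is a list of ("allow" | "disallow", pattern) pairs for one user agent."""
    best = None  # (pattern length, is_allow) of the strongest matching rule so far
    for kind, pattern in rules:
        if _regex(pattern).match(path):
            candidate = (len(pattern), kind == "allow")
            # Longer pattern wins; on equal length, allow (True) beats disallow.
            if best is None or candidate > best:
                best = candidate
    # No matching rule means the path may be crawled.
    return True if best is None else best[1]


# Hypothetical rule set: block modules, but re-open one image folder.
RULES = [
    ("disallow", "*/modules/"),
    ("allow", "*/modules/slider-example/img/"),
]
```

Under these assumptions, `is_crawlable("/modules/slider-example/img/banner.jpg", RULES)` is allowed because the Allow pattern is longer, while other paths under /modules/ stay blocked. Crawlers that do not implement this precedence may behave differently.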

WordPress SEO Creating Robots.txt File. Using Meta Robots

#
# robots.txt
#
User-agent: *
Crawl-delay: 10
# Directories
Disallow: /includes/
Disallow: /misc/
Disallow: /modules/
Disallow: /profiles/
Disallow: /scripts

Robots.txt Allow Two Subfolders in a Disallowed Folder

Only use robots.txt (and robots metatags) to exclude files, pages and directories that are intended to be available to people but not to robots, such as duplicate pages, test pages and demos. Rule of thumb: If you want to restrict robots from entire websites and directories, use the robots.txt file.

The Complete Guide to WordPress robots.txt (And How to Use

Disallow Free Download Disallow Software

My robots.txt file is in the SERPS Google SEO News and

A Standard for Robot Exclusion The Web Robots Pages

Allow only one file of directory in robots.txt? Stack

php Add pdf files to robots.txt on WordPress – Stack

ok, so I remove the pdf files using robots.txt and or GWMT. (current tests show this is not yet working and it is a couple of weeks along – but hey, I can wait.)
